The point of creating a survey campaign is to get reliable, actionable feedback. Unfortunately, that is not always what happens. And most of the time, it is the surveyor who is at fault.

How can you ensure that your survey is fair, unbiased, and contains questions that are easy for respondents to answer?

Read the guide to learn about survey response bias, the do’s and don’ts of crafting questions, and other best practices for collecting feedback.

What is Survey Response Bias?

Survey bias is a phenomenon that occurs when a sampling or testing process is influenced by selecting or encouraging one particular outcome or answer over others.

Survey response bias is a subtype of survey bias. It stems from faulty design that encourages respondents to give a particular answer.

Other types of survey bias include sampling bias, non-response bias, and survivorship bias.

At best, a biased survey produces unreliable data. At worst, it wastes your marketing budget and leads to misguided business decisions.

Types of Response Bias

Survey response bias usually becomes apparent in one of the following six ways.

Extreme Response Bias

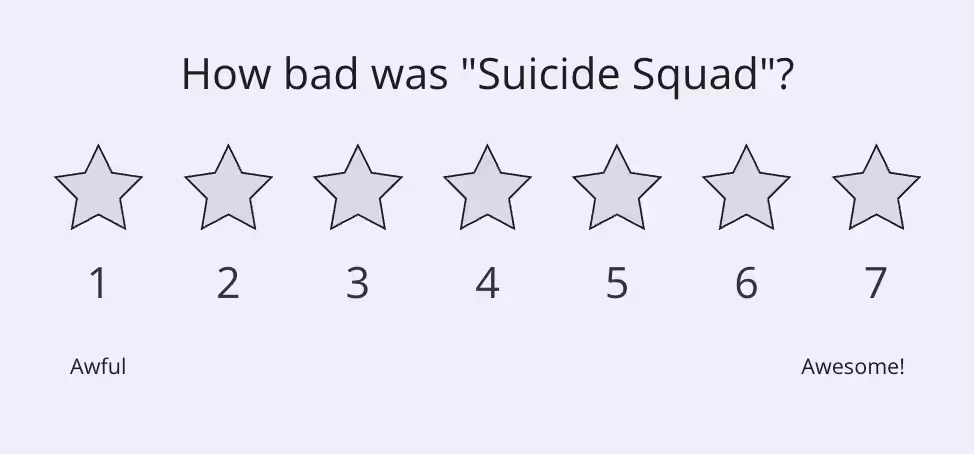

Some respondents will choose an extreme answer if the question is phrased to make it look like the “right” answer is an extreme one. This often happens with scaled questions (like ones using the Likert scale).

To avoid this, you should strive to make the question sound neutral and provide options to give a non-extreme answer. For example, include tiered responses like “sometimes.”

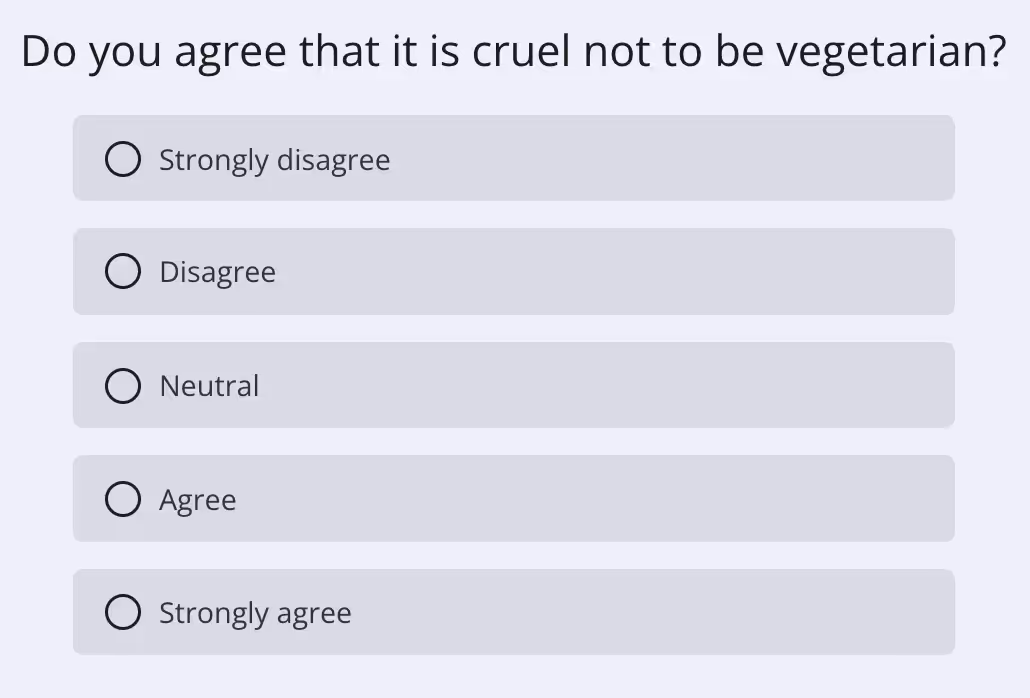

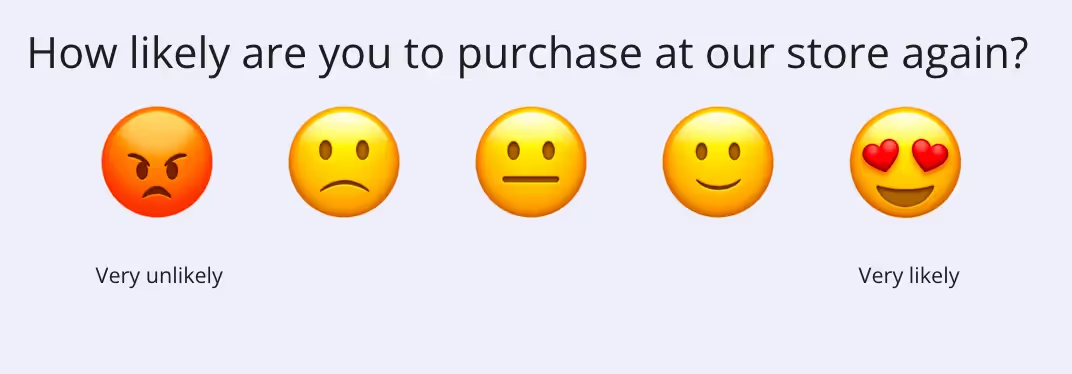

What’s more, do your best not to phrase the question in an emotive way, especially if you have a strong stance on the topic. For example:

The questions evoke emotion, and respondents are likely to agree or disagree strongly.

Extreme responding may also result from too many questions of the same type. If there are a lot of questions that use sliders or scales, answers may become increasingly extreme.

You may want to throw in some open-ended questions to gauge respondents' attention. They will have a more nuanced understanding of what they’re being asked about if they have to elaborate.

Neutral Response Bias

On the other end of the spectrum, we have neutral response bias. This is when respondents continually choose answers from the middle of the range.

This often happens when the researcher includes irrelevant questions. Respondents then choose neutral answers, giving the false impression that the topic is not an interesting one.

Survey respondents may also give neutral responses when surveys are too long (see survey fatigue) or due to sampling bias.
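If you export your responses, you can get a rough read on neutral responding by checking how often each respondent picks the midpoint of a scale. The sketch below is only an illustration: the function names, the 1 to 5 scale, and the 80% threshold are assumptions made for this example, not a Survicate feature or an established cutoff.

```python
from typing import Dict, List, Sequence


def midpoint_share(answers: Sequence[int], scale_min: int = 1, scale_max: int = 5) -> float:
    """Return the fraction of answers that sit exactly on the scale midpoint."""
    if not answers:
        return 0.0
    midpoint = (scale_min + scale_max) / 2
    return sum(1 for a in answers if a == midpoint) / len(answers)


def flag_neutral_responders(
    responses: Dict[str, Sequence[int]], threshold: float = 0.8
) -> List[str]:
    """List respondent ids whose midpoint share meets the (assumed) threshold."""
    return sorted(
        rid for rid, answers in responses.items()
        if midpoint_share(answers) >= threshold
    )


# Hypothetical answers to five 1-5 scale questions.
responses = {
    "r1": [3, 3, 3, 3, 3],  # always the midpoint
    "r2": [1, 4, 2, 5, 3],  # varied answers
    "r3": [3, 3, 2, 1, 3],  # mostly, but not always, the midpoint
}

flagged = flag_neutral_responders(responses)
print(flagged)  # ['r1']
```

A flagged respondent is not necessarily careless. Treat the result as a prompt to check whether the questions were relevant to that person or whether the survey was too long.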

Acquiescence Bias

Acquiescence bias happens when respondents agree with the questions even though that is not their actual stance on an issue. It usually stems from the perception that agreeing is easier or from a reluctance to hold a disagreeable position.

To avoid this, make sure your questions don’t ask the respondent to “confirm” something, and include a neutral response option.

It may also be that the survey is sent at an inappropriate time. For example, asking about customer service immediately after a purchase is complete may give you false results, as the participant did not have an interaction with your customer support team.

They may be inclined to praise the CS team as they are excited about their purchase.

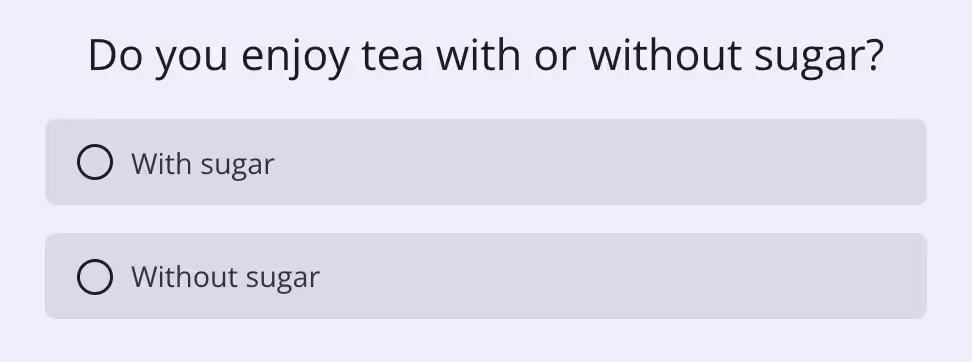

Don’t include assumptions within your question. For example:

Presumes that the respondent enjoys tea. It doesn’t give them wiggle room to let you know that tea is not their beverage of choice.

Similar to extreme response bias, acquiescence bias may also result from too many questions of a similar character being asked one after another. This may even lead to contradictory responses.

That’s why you shouldn’t overuse a particular question type.

Order Effects Bias

Be particular about your question order. If you plan it poorly, you may get inaccurate answers, especially if participants try to avoid being inconsistent. Make sure your question order doesn’t suggest a “right” way of filling out a survey.

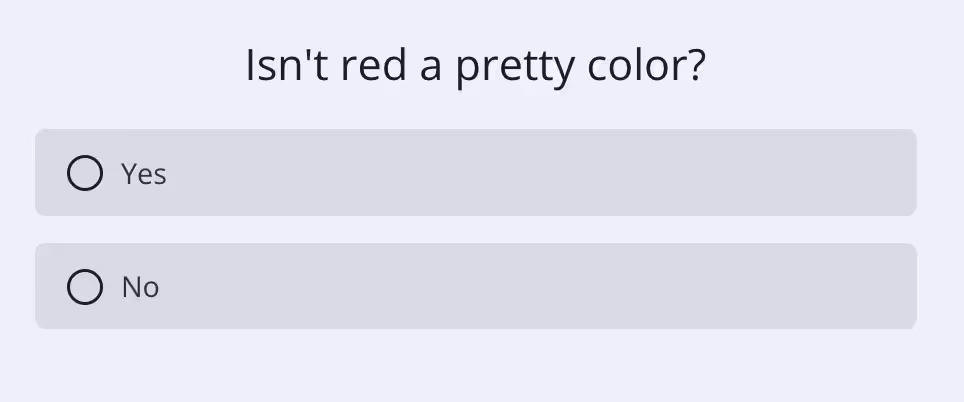

For example, this question:

Shouldn’t be followed by one such as this:

Because it may lead the answer to be positive. The respondent will strive to be consistent with previous answers instead of giving each question the focus it deserves.

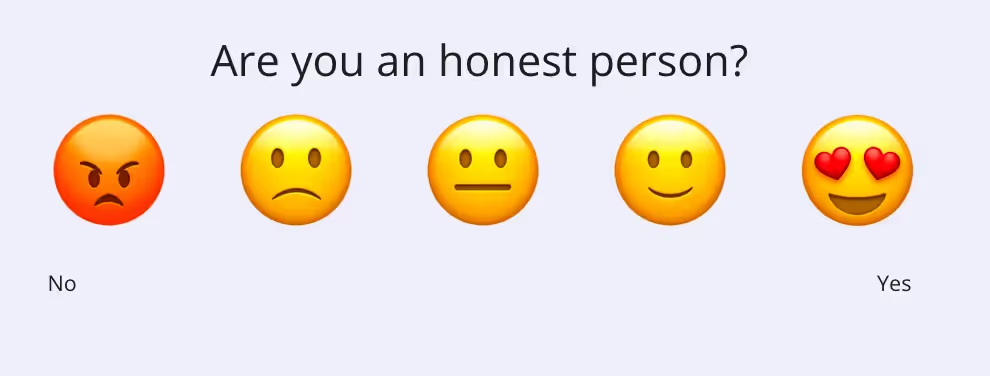

Social Desirability Bias

Respondents may want to appear more socially desirable or attractive to the interviewer, resulting in them answering uncharacteristically or lying to appear in a positive light.

The issue could be due to the researcher's choice of topic, or it could be that the participants feel insecure or uncomfortable with the topic, which affects their answers.

Confirmation Bias

When researchers evaluate survey data, they may be inclined to look for patterns that confirm their existing beliefs or hypotheses, even if the data doesn't actually support those conclusions. This can lead to inaccurate or misleading results.

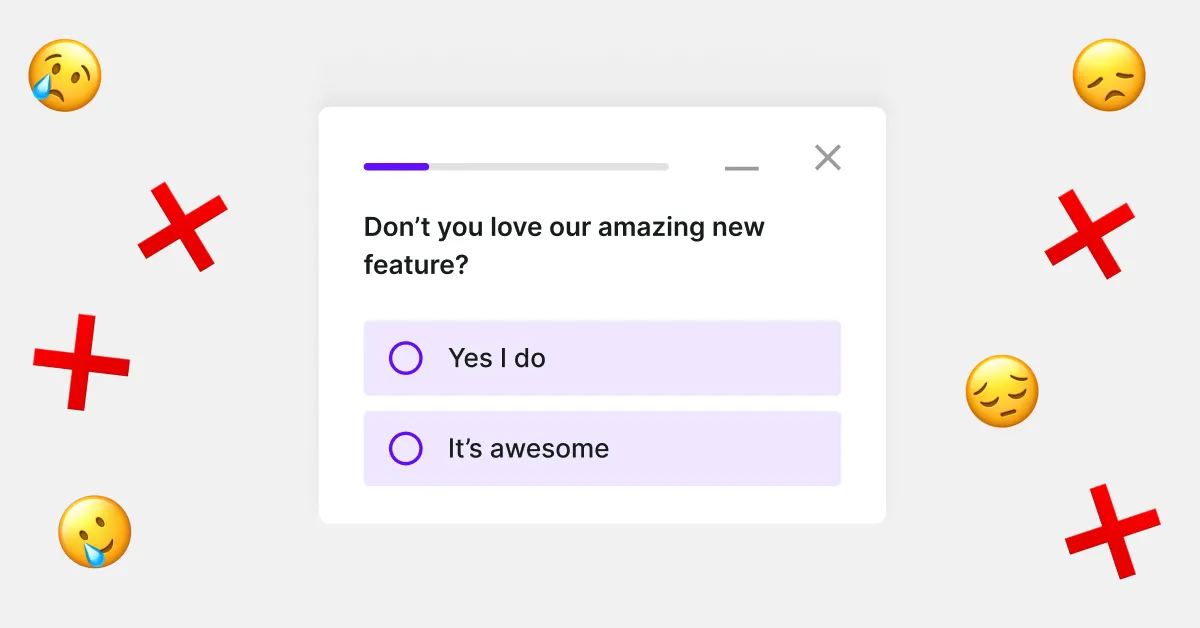

For example, including a survey along the lines of

As a pop-up on the homepage makes no sense since the only people who see it are the ones who are on the site already. While these may be truthful responses, they do no more than give the surveyor “a pat on the back.”

Response Bias vs. Non-Response Bias

Non-response bias happens as a result of certain respondents not filling out surveys.

This could be due to sampling bias but may also be caused by using ineffective channels, for example, sending a survey out to a batch of inactive email addresses. If you are trying to find out why subscription plans were canceled, you may find yourself surveying people who no longer use the emails in your database.

Another example: most restaurant reviews are biased toward people who had a very good or very bad experience. This is because those customers are more likely to leave a review than those who had a neutral experience.

Similarly, people who have a lot of knowledge about a subject are more likely to participate in a survey about that subject than people who don't know much about it.

If your survey response rate is low, you may be looking at non-response bias. Those who do respond may do so because, for instance, they are more satisfied than those who don’t. You are missing out on valuable data and insights from respondents who opted out.

Imagine you wanted to survey your employees about the workload. Those who have time to fill out the survey will likely have fewer tasks and responsibilities. This gives you a non-response bias because those who are overworked will not have the time to give you feedback.
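One simple way to spot this pattern is to compare response rates between groups you already know something about. The sketch below uses made-up headcounts for the workload example. The group names, the numbers, and the `response_rates` helper are all hypothetical and only show the arithmetic.

```python
from typing import Dict


def response_rates(invited: Dict[str, int], responded: Dict[str, int]) -> Dict[str, float]:
    """Return the share of invited people who responded, per group."""
    return {
        group: responded.get(group, 0) / count
        for group, count in invited.items()
        if count > 0  # skip empty groups to avoid dividing by zero
    }


# Illustrative numbers only, not real survey data.
invited = {"lighter workload": 40, "heavier workload": 60}
responded = {"lighter workload": 30, "heavier workload": 12}

rates = response_rates(invited, responded)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%}")
```

A large gap between groups suggests that the answers you collected over-represent one of them, so read the overall results with that in mind.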

This can be partly solved by information and incentive. Create an introduction to your survey, letting respondents know that it is in their interest to respond to make a process or product better or more efficient.

How to Avoid Survey Response Bias?

Each person’s opinion, both respondents’ and surveyors’, is subjective and depends on many factors. This means eliminating survey response bias entirely is impossible. But there are several things you can take into account to minimize it.

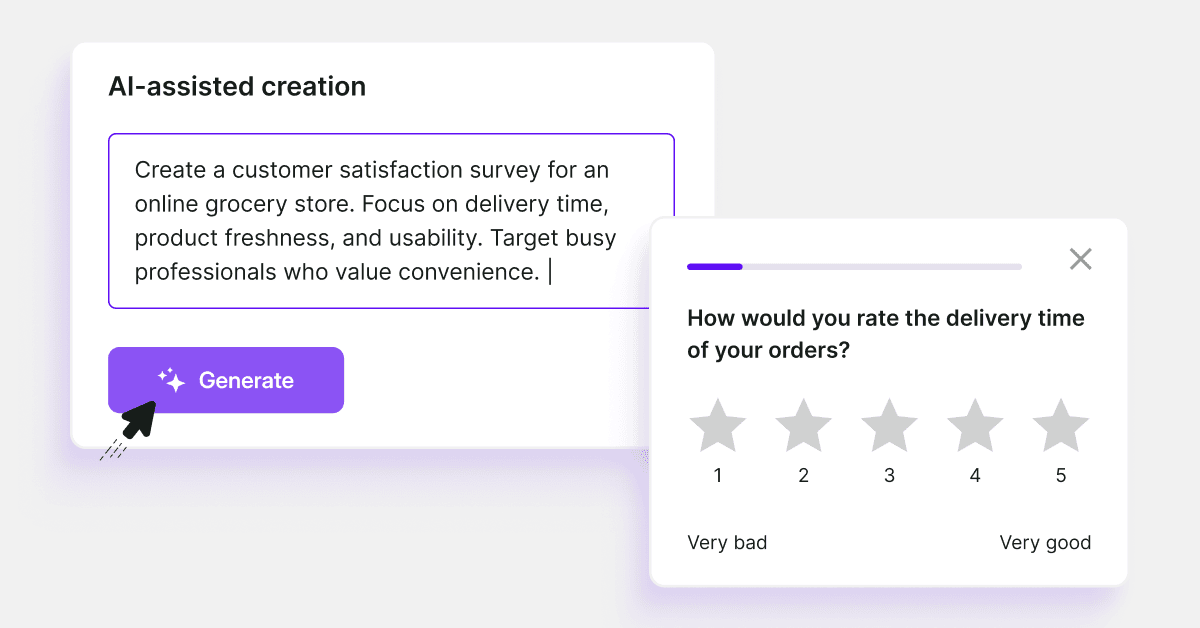

If you want to skip the survey design stage, use one of Survicate’s survey templates. They were designed to minimize survey response bias as much as possible.

But, especially if you are creating a highly custom survey, you may have to build it yourself. Let’s dive into some best practices.

Avoid the Following Types of Questions

These are the most common types of questions that result in unreliable feedback.

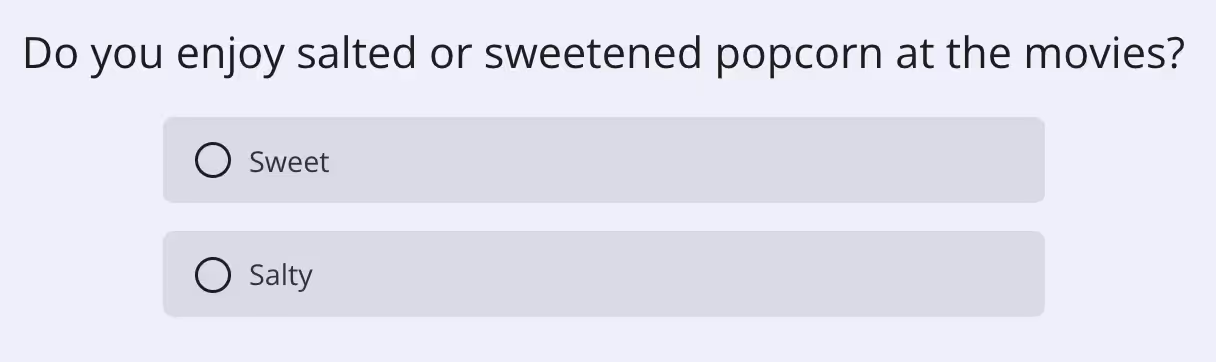

Loaded Questions

Do not use any questions with assumptions. For instance

Assumes that the respondent enjoys going to the movies and has snacks at the cinema.

It would be better to create three questions

- Do you enjoy going to the movies?

- What type of snack do you like at the movies?

- Do you prefer sweet or salty popcorn?

And make use of skip logic. You may also want to include an “other” option in the second question, as you are unlikely to anticipate every possible response.

This way, there is less pressure on the respondent to answer a question in a particular way.
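To make the idea of skip logic concrete, here is a minimal sketch of how the three questions above could branch. The question ids, the `next_question` helper, and the branching rules (stop after a “no,” ask about popcorn flavor only if popcorn was chosen) are assumptions made for illustration. In a survey tool you would normally set this up in the editor rather than in code.

```python
from typing import Dict, List, Optional

QUESTIONS = {
    "enjoy_movies": "Do you enjoy going to the movies?",
    "snack_type": "What type of snack do you like at the movies?",
    "popcorn_flavor": "Do you prefer sweet or salty popcorn?",
}


def next_question(current: str, answer: str) -> Optional[str]:
    """Return the id of the next question to show, or None to end the survey."""
    answer = answer.strip().lower()
    if current == "enjoy_movies":
        # Only ask about snacks if the respondent goes to the movies at all.
        return "snack_type" if answer == "yes" else None
    if current == "snack_type":
        # Only ask about popcorn flavor if popcorn was the chosen snack.
        return "popcorn_flavor" if answer == "popcorn" else None
    return None


def run_survey(answers: Dict[str, str]) -> List[str]:
    """Return the ids of the questions a respondent would actually see."""
    shown = []
    current: Optional[str] = "enjoy_movies"
    while current is not None:
        shown.append(current)
        current = next_question(current, answers.get(current, ""))
    return shown


print(run_survey({"enjoy_movies": "No"}))
# ['enjoy_movies']
print(run_survey({"enjoy_movies": "Yes", "snack_type": "Other: nachos"}))
# ['enjoy_movies', 'snack_type']
print(run_survey({"enjoy_movies": "Yes", "snack_type": "Popcorn", "popcorn_flavor": "Salty"}))
# ['enjoy_movies', 'snack_type', 'popcorn_flavor']
```

Someone who doesn’t go to the movies never sees the snack questions, so no one is asked to answer something that assumes a habit they don’t have.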

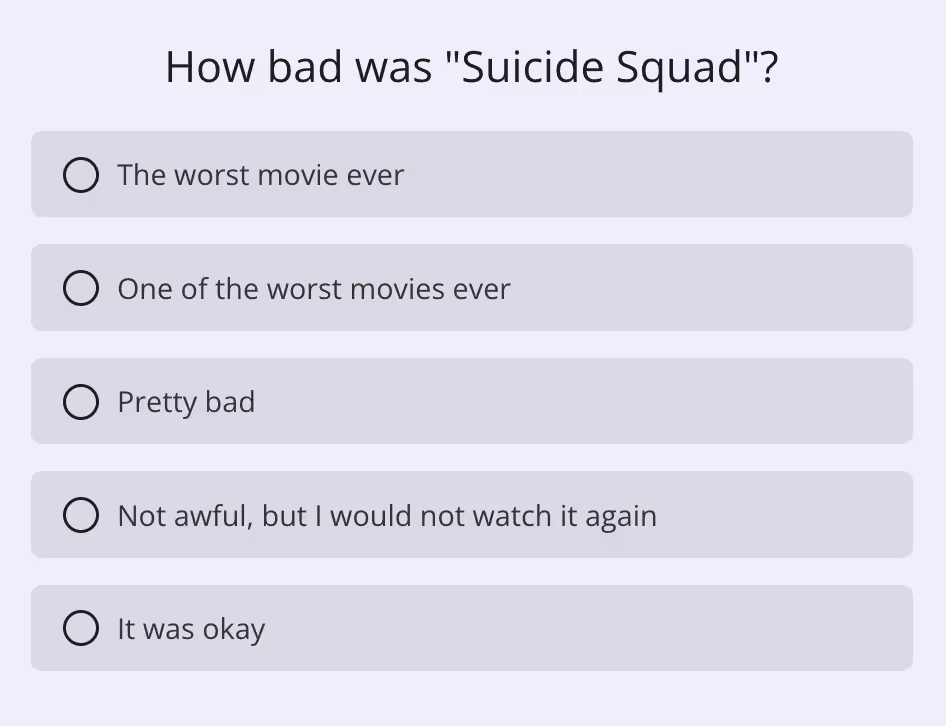

Leading Questions

A leading question “suggests” there is a right answer. If you ask

You suggest to the respondent that the movie was bad. But this can also happen with scaled questions. For example, if you provide more “satisfied” options, the responses will be biased.
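A quick sanity check for a scaled question is to count how many answer options lean positive and how many lean negative. The sketch below assumes you tag each option by hand with a polarity (+1, 0, or -1). The tagging scheme, the option labels, and the `scale_skew` helper are illustrative assumptions rather than part of any survey tool.

```python
from typing import Sequence, Tuple

# Polarity is assigned by hand in this sketch: +1 positive, 0 neutral, -1 negative.
ScaleOption = Tuple[str, int]


def scale_skew(options: Sequence[ScaleOption]) -> int:
    """Return positive options minus negative options; 0 means the scale is balanced."""
    positives = sum(1 for _, polarity in options if polarity > 0)
    negatives = sum(1 for _, polarity in options if polarity < 0)
    return positives - negatives


leading_scale = [
    ("Extremely satisfied", 1),
    ("Very satisfied", 1),
    ("Satisfied", 1),
    ("Neutral", 0),
    ("Dissatisfied", -1),
]

balanced_scale = [
    ("Very satisfied", 1),
    ("Satisfied", 1),
    ("Neutral", 0),
    ("Dissatisfied", -1),
    ("Very dissatisfied", -1),
]

print(scale_skew(leading_scale))   # 2
print(scale_skew(balanced_scale))  # 0
```

A nonzero result means one side of the scale has more options than the other, which nudges respondents toward that side.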

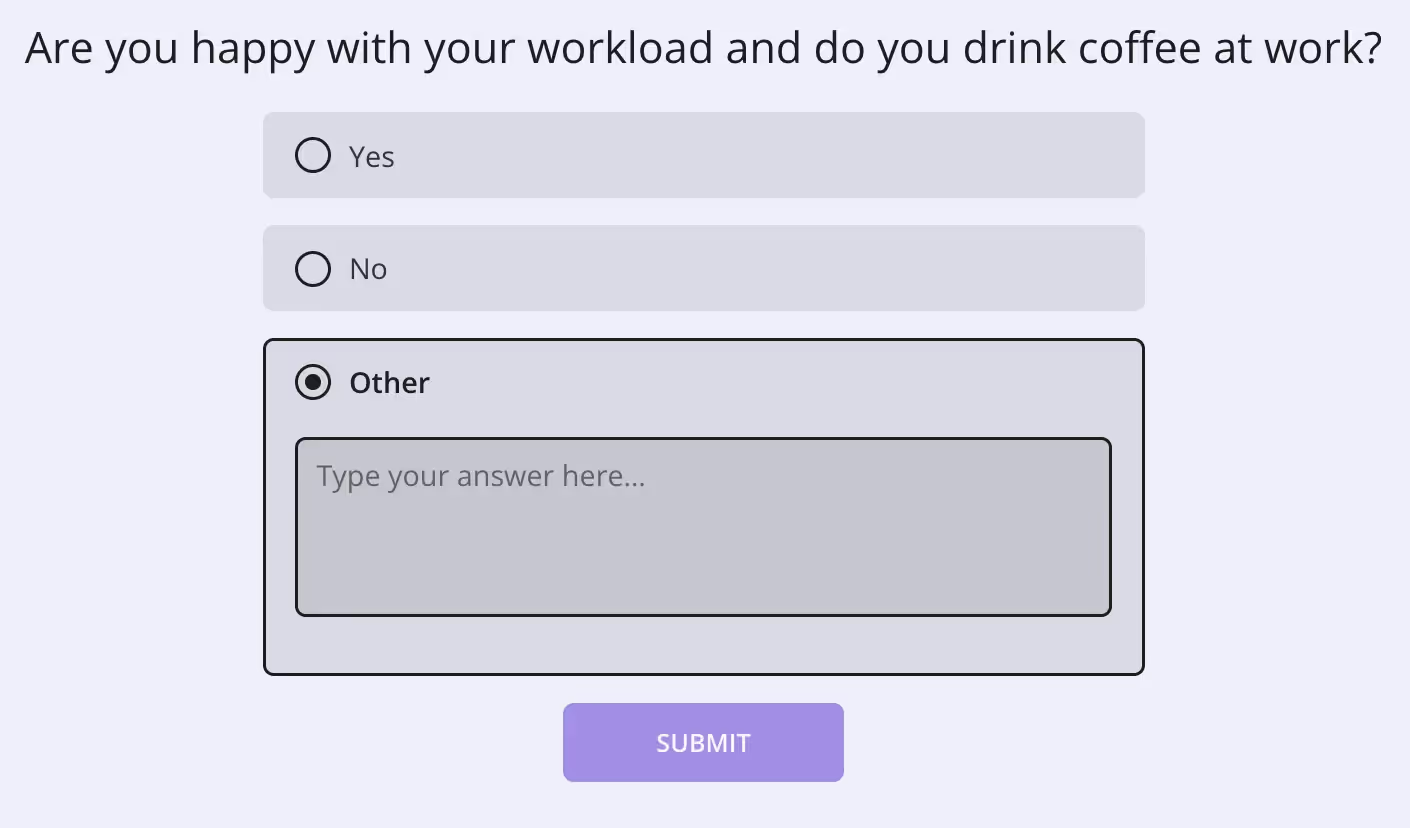

Double-Barreled Questions

Double-barreled questions ask about more than one thing at once.

Even if you provide the option to answer in text form, this will make analysis very difficult. Split the question into separate ones that would be easier in a closed-ended format. This can also help minimize the risk of survey fatigue.

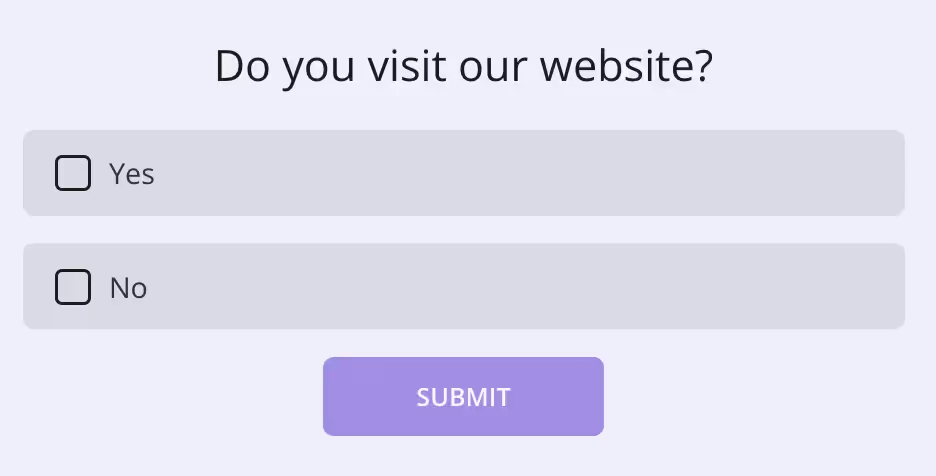

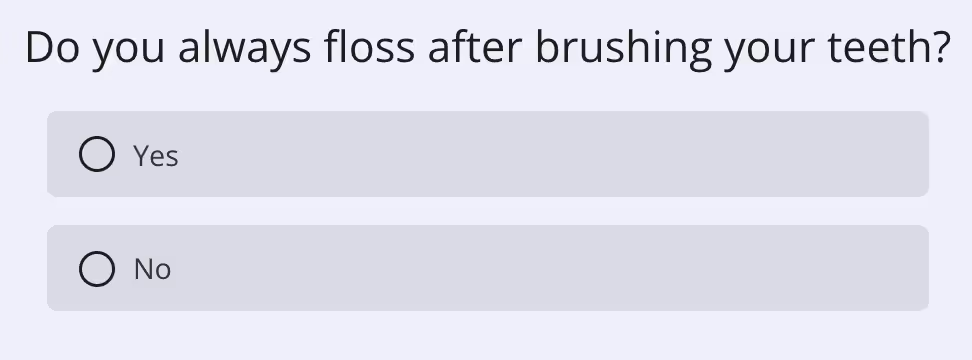

Absolute Questions

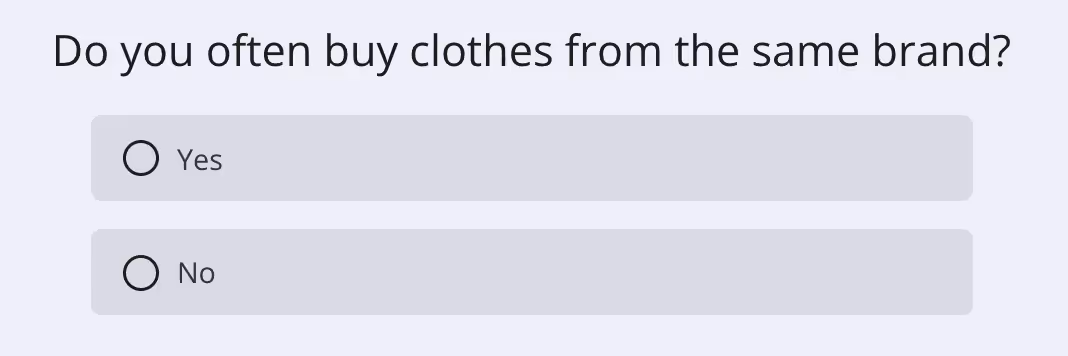

You can think of absolute questions as “yes or no” or “always or never” questions. Always include an in-between option.

This is not only because there is rarely a situation when someone can absolutely say “always” or “never,” but also because respondents in the same situation might give you different answers due to subjectivity.

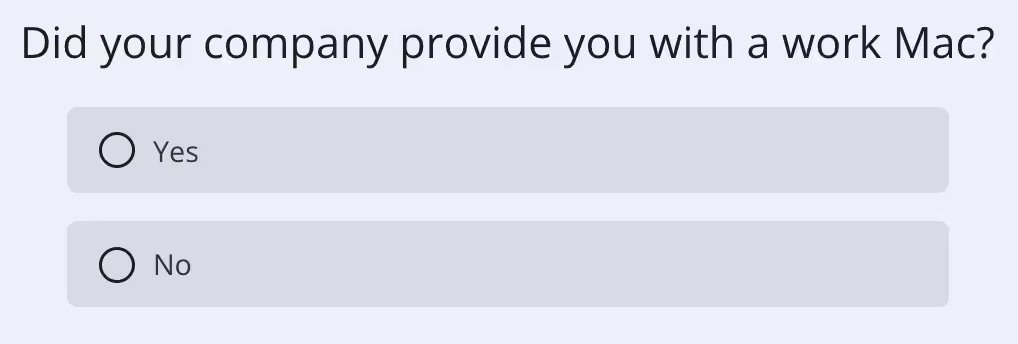

For example:

Could give inaccurate data since most people don’t floss every single day. One may think, “well, I don’t always floss, but I try to,” and select “yes,” while someone else may think, “well, no, not every single time,” and select “no.”

What’s more, this may also make respondents think the survey was designed poorly and neglect to give it proper attention or even abandon it.

Try to use a scale or multiple-choice questions, especially when asking about habits or routines.

Unclear Questions

Unclear questions can tire and confuse respondents, making them feel like their feedback won’t be helpful. Avoid technical terminology, abbreviations, and acronyms that respondents may not be familiar with.

Try not to be too specific either. For example, don’t ask questions like:

As some people may have received a PC from a brand other than Apple.

Stereotype Questions

Don’t ask respondents to identify themselves by gender, race, ethnicity, or age unless absolutely necessary.

Give the Option Not to Answer

There will almost always be someone for whom a certain question is inapplicable or uncomfortable. Respondents may answer untruthfully or opt out of the survey altogether.

The solution is to include a “prefer not to answer” option.

Provide All Possible Answers

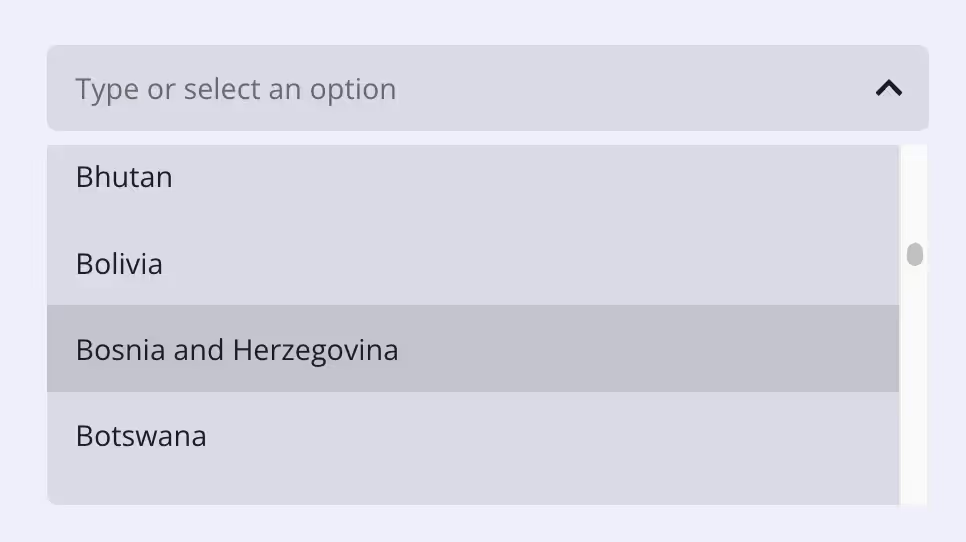

Do your best to include all possible options with multiple-choice and single-choice questions. If you are unsure, an “other, please specify” option is always a good idea.

Sometimes it makes more sense than listing every option you can think of. If you wanted to know which country your respondent is from, you’d have to make an insanely long list that could mess with the survey's design.

With Survicate, you can choose a dropdown question that is more aesthetically pleasing.

Leave the Demographic and Personal Questions for Later

Starting off very personal may steer respondents away from completing a survey. You can ask for demographic information at the end or do your best to gather this data via integrations. Avoid superfluous questions at all costs.

Ask Someone Else to Review Questions for Bias

Before making your survey public, ask team members or other colleagues to review the questions for bias. A third-party perspective or peer review is key to any feedback campaign.

Provide Incentive to Complete the Survey

Let your customers know what they gain if they take the time to give you reliable feedback. Inform them how important their answers are to you and that you plan on using the data to make their experience with your brand better.

But be careful. You don’t want your incentive to skew answers either.

Make sure the incentive is available to all respondents, not just those who complete the survey in a particular way.

Resurvey Non-Respondents

Most people will fill out a survey if they have the opportunity to do so when they receive it. Think about it: how often do you go through old emails looking for surveys you can complete?

In case someone was willing to give feedback but just didn’t have the time to respond the first time, resurvey non-respondents. It won’t take a lot of effort on your part, and you may end up with some interesting insights.
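Working out who to resurvey is mostly a matter of comparing two lists: everyone you invited and everyone who has already responded. The sketch below assumes both lists are available as email addresses. The `non_respondents` helper and the example.com addresses are hypothetical.

```python
from typing import Iterable, List


def non_respondents(invited: Iterable[str], responded: Iterable[str]) -> List[str]:
    """Return invited emails with no recorded response, in invitation order.

    Emails are compared case-insensitively, and duplicate invitations are dropped.
    """
    responded_keys = {email.strip().lower() for email in responded}
    seen = set()
    pending = []
    for email in invited:
        key = email.strip().lower()
        if key in responded_keys or key in seen:
            continue
        seen.add(key)
        pending.append(key)
    return pending


# Hypothetical contact lists.
invited = ["ana@example.com", "Ben@example.com", "cy@example.com", "ana@example.com"]
responded = ["ben@example.com"]

to_resurvey = non_respondents(invited, responded)
print(to_resurvey)  # ['ana@example.com', 'cy@example.com']
```

Normalizing the addresses before comparing them helps you avoid sending a reminder to someone who has already answered under a differently capitalized email.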

Ready to Create Your Unbiased Survey?

Don’t waste your marketing budget on gathering unreliable results. Do your best to design the most objective and neutral survey you can. Use our templates for customer experience and satisfaction campaigns, as the work is already done for you.

Survicate offers dozens of ready-to-use templates for your most common survey needs, such as NPS, CSAT, CES, and many more. They are fully customizable; you can add your own colors and branding (and even remove ours).

Each question can have its individual logic and be modified with new answer options. You can also add your own social media handles at the end.

Elevate your decision-making with valuable customer feedback insights. Join Survicate for free and access essential features during our 10-day trial. And don't miss our pricing.
