I’ll say it – surveys are one of the best ways to learn from your customers and leads. Period. They can reveal why people churn, what they love (or don’t) about your product, and spark ideas for improvement.

Still, getting enough high-quality responses is often harder than it seems. People skip questions, abandon surveys halfway, or give answers that don’t quite help. The difference between a survey that works and one that flops often comes down to survey psychology – understanding how people think, decide, and respond.

In this post, I’ll break down how to use survey psychology to design surveys that get more responses and yield better data.

Inside the mind of a survey taker

Before we dive into tips, it helps to know what happens in a person’s head when they take a survey. Respondents go through four cognitive steps when answering a question: comprehension, memory retrieval, judgment, and response selection.

When someone sees a question, they first interpret its meaning (comprehension), then search their memory for relevant information, form a judgment based on what they recall, and finally map that judgment onto the available answers.

It’s a complex mental process that can quietly sabotage your data if you’re not careful. For example, if a question is unclear or uses technical jargon, the respondent’s comprehension can falter. Someone might not be sure whether “formal educational program” includes short professional courses or only full-time degrees, and their answer ends up meaning something different than what you intended.

Memory is another bottleneck. People struggle to recall specifics, and their brains take shortcuts, especially under time pressure or cognitive load. On top of that, biases creep in during the judgment and response steps.

Respondents often adjust answers based on social desirability (trying to look good) or what they think you want to hear. Even the layout of answer choices (say, vertical vs horizontal scales) can nudge people toward certain options.

Why am I telling you all of this? To help you realize that survey design is about creating a smooth mental experience. You need to align your questions with how people naturally understand and decide, minimizing confusion, memory strain, and unintended bias.

Keep this psychological backdrop in mind as we move into concrete design strategies.

How to run surveys to secure higher response rates

Enough theory! Let’s now take a look at how these survey psychology rules can be used while designing surveys.

Wording matters – ask questions people can answer

Words matter – a lot. Even small phrasing tweaks can change how people respond. Your questions should be easy to understand and free of bias. A good rule of thumb is the BRUSO model: make questions brief, relevant, unambiguous, specific, and objective.

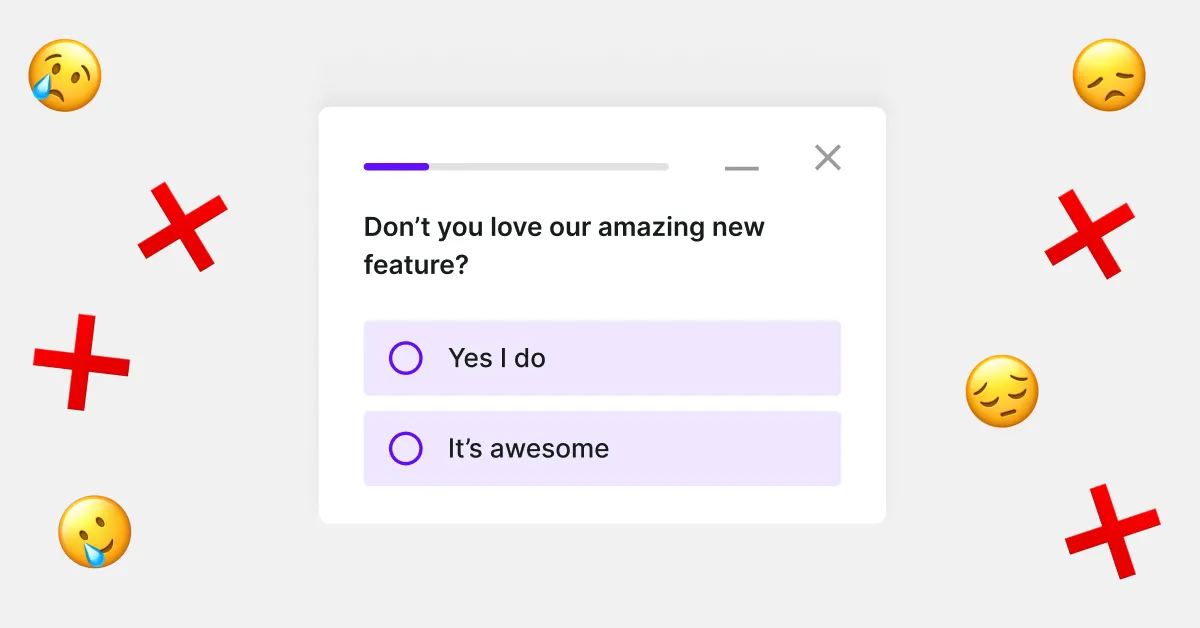

This means cutting out jargon and big words, sticking to one idea per question, and not hinting at a “right” answer. For example, avoid leading language – compare “What do you enjoy about our product?” versus “Don’t you just love our product features?” The first is neutral; the second is subtly pressuring for praise.

Likewise, watch out for double-barreled questions that ask two things at once, e.g., “How satisfied are you with our website and customer service?” Respondents won’t know which part to answer, and your data will be muddled. If you need both pieces of info, split the question in two. Clarity is especially crucial for an audience that isn’t full of research scientists – if a customer success manager has to reread your question, it’s too complex.

Good example ✅ “What do you enjoy about our product?”

Bad example ❌ “Don’t you just love our product features?”

Tip: Avoid leading language that pressures respondents.

Here’s a famous example of wording impact. Researchers found that a group of U.S. voters reacted differently to the term “global warming” (less favorably) vs “climate change” (more favorably).

People report different levels of concern depending on which term is used. “Global warming” tends to evoke stronger emotional reactions and partisan divides compared to the broader “climate change.”

Choose words carefully to ensure they mean the same thing to respondents as they do to you. And whenever possible, keep questions short and simple – long, convoluted questions increase cognitive burden and drop-offs.

Keep it short and relevant

When it comes to surveys, shorter is usually better. Professionals in CX or product roles are busy – and even motivated customers have limited attention spans. Every extra minute (or question) you add increases the chance of drop-offs.

Research shows that once a survey exceeds about twelve minutes on desktop or nine minutes on mobile, respondent drop-off rates rise sharply. It can be tempting to include “nice-to-know” questions, but if they don’t directly support your main objective, it’s best to leave them out.

Katie Jones, Owner of Squirrel Gifts, emphasizes the same point from hands-on experience. “Lengthy questionnaires feel like work, which provokes people to leave or skim over their responses,” she explains. Her team tested this by cutting their twelve-question survey down to five concise, conversational items. “Every question was in easy, inviting language,” she says, “which made them feel more like a chat with our shop staff than a formal questionnaire.”

They kept just one open text box for additional comments, which led to “more thoughtful replies.” Within six months, completion rates jumped from 28.5% to 61.2%, and the open responses became far more detailed. As Jones concludes, “This improvement showed how brevity and ease of style had a direct bearing on responses.”
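When you report a jump like this, it helps to say whether you mean percentage points or relative growth, because the two numbers differ a lot. Here is a minimal Python sketch of that arithmetic using the figures quoted above; the function name `completion_lift` is my own, purely for illustration.

```python
def completion_lift(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    point_change = after_pct - before_pct
    relative_change = point_change / before_pct * 100
    return round(point_change, 1), round(relative_change, 1)


points, relative = completion_lift(28.5, 61.2)
print(f"{points} percentage points, {relative}% relative increase")
# prints: 32.7 percentage points, 114.7% relative increase
```

In other words, the same result can be described as roughly 33 points higher or as a completion rate that more than doubled.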

The previously mentioned research supports her findings. Completion rates drop significantly when a survey contains more than three open-text questions. While open-ended items can provide rich insights – and tools like Survicate can analyze them at scale – they also require more effort from respondents. Consider converting some open-ends into multiple choice (with an “Other, please specify” option) to make them faster to answer.

Another smart move is to use existing data instead of asking redundantly. For instance, rather than asking “How did you hear about our product?”, you might already have referral or campaign data in your system. Every question you remove is one less chance for survey fatigue. Keeping the survey focused and succinct not only improves response rates but also signals to respondents that you value their time – which boosts trust and completion.
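If you keep your survey drafts in code or a spreadsheet export, the checks from this section can be roughed out in a few lines. The Python sketch below is only an illustration under assumptions: the `Question` structure, the function names, and especially the seconds-per-question estimates are hypothetical placeholders, while the 12-minute, 9-minute, and three-open-text limits come from the figures quoted above.

```python
from dataclasses import dataclass

# Limits quoted in the text above.
MAX_MINUTES = {"desktop": 12, "mobile": 9}
MAX_OPEN_TEXT = 3

# Assumption: rough seconds needed per question type. These numbers are
# placeholders, not research findings; replace them with your own timings.
SECONDS_PER_KIND = {"choice": 10, "scale": 10, "open_text": 45}


@dataclass
class Question:
    key: str   # what the question captures, e.g. "referral_source"
    kind: str  # "choice", "scale", or "open_text"
    text: str


def drop_known(questions, known_keys):
    """Remove questions whose answers you already hold (e.g. campaign data)."""
    return [q for q in questions if q.key not in known_keys]


def length_warnings(questions, device="mobile"):
    """Return human-readable warnings when the draft exceeds the limits above."""
    warnings = []
    minutes = sum(SECONDS_PER_KIND[q.kind] for q in questions) / 60
    if minutes > MAX_MINUTES[device]:
        warnings.append(
            f"Estimated {minutes:.1f} min exceeds {MAX_MINUTES[device]} min on {device}"
        )
    open_text = sum(q.kind == "open_text" for q in questions)
    if open_text > MAX_OPEN_TEXT:
        warnings.append(
            f"{open_text} open-text questions; consider converting some to multiple choice"
        )
    return warnings


draft = [
    Question("referral_source", "choice", "How did you hear about our product?"),
    Question("satisfaction", "scale", "How satisfied are you with our website?"),
    Question("comments", "open_text", "Anything else you would like to share?"),
]
draft = drop_known(draft, known_keys={"referral_source"})
print([q.key for q in draft])  # ['satisfaction', 'comments']
print(length_warnings(draft))  # []
```

A check like this won’t tell you whether a question is worth asking, but it can flag a draft that has quietly grown too long before it reaches respondents.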

Question order matters

One of my favorite tips? Think of surveys as a structured conversation. You wouldn’t sit down with someone you barely know and immediately ask, “so, what’s your annual revenue – and oh, how old are you?”

That would be a guaranteed shutdown. The same principle applies to question sequencing. A survey should ease people in, starting with light, relevant questions that help them settle in and build a bit of confidence.

These could be about something recent or opinion-based. If you can tap into what they’ve just experienced (for example, in your product) or what you know they care about, you’re far more likely to keep them with your questionnaire until the end.

Once they become invested in the survey, you can gradually move into more detailed or reflective territory. Also, I can’t stress this enough – save the personal or sensitive questions related to demographics for last. By that point, they will have seen the value of the conversation and might be more comfortable sharing.

Tom Jauncey from Nautilus Marketing told me that his agency follows this approach. “We include some captivating, easy-to-do questions before opening more reflective options,” he said. By signaling early progress through tone, language, and subtle pacing, they saw a 30%+ lift in completion rates. Also, they started collecting more answers to open-ended questions.

See how this funnel approach mirrors trust-building in real life? Start general, then narrow in. It’s smoother for the respondent and better for your data.

Beware: question order can affect answer accuracy, too

One thing might force you to deviate from this easy-medium-hard sequence: people tend to carry a recency bias from one question into the next.

If you ask about something that evoked negative feelings, this might affect the person’s next few responses while the emotions are still strong. That’s why some research teams, like those at Pew, randomize answer choices or even entire blocks of questions to reduce the risk of bias. If you feel that two questions could sway each other, consider how their order affects the story you’re asking someone to tell.
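If your survey tool doesn’t randomize for you, the idea is simple enough to sketch. The Python example below is a minimal illustration, not how any particular research team or platform implements it; the function name `randomized_order` and the respondent ID are hypothetical. It shuffles options per respondent, seeds the shuffle with the respondent’s ID so the same person always sees the same order, and keeps catch-all options such as “Other, please specify” at the end.

```python
import random


def randomized_order(items, respondent_id, pinned_last=()):
    """Shuffle items per respondent, keeping pinned items at the end."""
    # Seeding with the respondent ID makes the order stable across page reloads.
    rng = random.Random(respondent_id)
    movable = [item for item in items if item not in pinned_last]
    pinned = [item for item in items if item in pinned_last]
    rng.shuffle(movable)
    return movable + pinned


options = ["Price", "Features", "Support", "Other, please specify"]
order = randomized_order(
    options, respondent_id="user-42", pinned_last=("Other, please specify",)
)
print(order)  # the first three options in a shuffled order, "Other" always last
```

The same function works for whole blocks of questions. It only makes sense for unordered choices, though; shuffling an ordered scale (from “Very dissatisfied” to “Very satisfied”, for instance) would just confuse people.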

Design for attention – make it visually easy

The way your survey looks, flows, and responds on different devices determines how easily people move through it. Proper formatting, generous white space, and clear visual hierarchy help respondents stay focused on what you’re asking – not on how to get to the next question.

You must also remember that most people won’t be sitting at a desk when they take your survey. They’re on a phone, half in transit, half in another task. So if your scales are squished, the buttons are tiny, or they need to pinch and zoom just to read, they’ll drop off. Testing your survey on mobile devices is simply about respecting your respondents' time.

What I find particularly noteworthy is that presentation form affects the answers themselves. A vertical Likert scale can push people toward stronger opinions, while a horizontal one tends to pull answers toward the middle.

And if your options always appear in the same order (and your survey is in a left-to-right language), a left-side bias will kick in. People will naturally gravitate to the first thing they see.

Going past the first question – expert tip

A great example of a “smart” survey flow comes from Barbara Robinson, Marketing Manager at WeatherSolve Structures. She told me that she always makes sure that the very first question is a one-click, binary option. “It should appear right on the screen, not behind a ‘Start Survey’ button.”

Their opening question is as simple as “Yes” or “No”. The second a person taps on their answer, the progress bar jumps to 25%.

That tiny, instant win changed everything. As Robinson put it, “this visual and quantitative pleasure for little effort” gave respondents a sense of progress from the start. Over twelve months, they saw a 35% increase in completion rates on a five-question survey. Not because it was shorter, but because it felt achievable.

When you’re building longer surveys, those little encouragements matter.

Create a safe space for honesty

Most of us, without even realizing it, try to sound a little better or give the answer we think is expected. That’s social desirability bias at work. If you want honest feedback, you have to create an environment where people feel safe being honest.

Start by assuring people their responses are anonymous or confidential and be clear about what that actually means. A line like “Your responses are anonymous and won’t be linked to your identity” sets the tone.

If you can’t promise full anonymity (say, the survey is tied to an email), explain how answers will be used and that no individual will be singled out. When people trust the process, they open up.

Tone plays a huge role here, too. A follow-up like “Explain why you gave a low score” could feel defensive, like you're calling out a person who gave you a negative score. But if you turn that into “Could you share a bit about what led to that score? We’re looking to improve”, then that’s a different story. It’s transparent and appreciative, and that can turn a reluctant respondent into a willing one.

Emily Ruby, Attorney and Owner of Abogada De Lesiones, makes this a core part of her approach. “In the survey invite, I thank the respondent for their time, whether they take the survey or not,” she said. She also explains how feedback will be used to improve the customer experience, making it clear there's value in participating.

What’s most interesting, in my opinion, is that she didn’t change a single question in the survey itself! The only change was the invitation message. Within 60 days, response rates jumped by 21%. That’s the power of trust.

Timing is everything

One thing nearly every expert I spoke to brought up was timing. Not timing in a “technical” sense, like 8 AM versus 8 PM, although that can matter too if you know when your potential respondents are most active online. Here, I’m referring to timing in a “human” sense. Ask someone for feedback while they’re still in the moment, and you’ll hear the truth. Wait too long, and what you get is a polished memory or a lack of response altogether.

Lital Lev-Ary, Founder at Light Touch NYC, used to send feedback surveys 48 hours after appointments. She told me that the response rate was low, at around 12%, and the answers felt distant: polite, but “flat”.

Then she moved the question to within 90 minutes of the session, sent via text: “How did your skin feel right after treatment?”

Suddenly, the response rate jumped to 61%, and clients got more specific, describing real sensory details like itching or redness, which would’ve faded by the next day.

That single timing shift gave her something else too: emotion. She could identify anxiety triggers, fine-tune device intensity, and even improve pre-treatment conversations.

The same mechanism worked in a completely different field. Athena Kavis, Founder at Web Design Las Vegas, said that she has launched over a thousand websites and noticed that feedback quality always changed depending on when she asked her clients to fill out a survey.

Unlike Lev-Ary, though, she went a step further: instead of sending one long survey, she broke it down into two or three micro-surveys, each sent at an emotional milestone. One went out shortly after a site she designed went live, and another after the first 100 orders through the page.

Completion rates from these extra-short surveys rose from 31% to 78%. More importantly, the responses also got richer. Right after launch, Kavis’ clients wanted to celebrate or vent. After 100 orders, they were reflective and ready to talk about what actually drove sales. The timing made the question feel relevant.

Both of these examples show that you don’t just collect feedback, you catch it. If you ask while the experience is still warm, you’re more likely to get unfiltered, true-to-life opinions.
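If you trigger surveys from your own system, the “ask while it’s warm” rule can be expressed as a small lookup: each event gets a question and a time window, and the survey is skipped once the window has passed. The Python sketch below is a rough illustration under assumptions. The event names, the `survey_for` function, the launch question, and its one-day window are all hypothetical; only the 90-minute window and the skin-treatment question come from the examples above.

```python
from datetime import datetime, timedelta

# Hypothetical rules: event name -> (question, how long the moment stays "warm").
RULES = {
    "session_finished": (
        "How did your skin feel right after treatment?",
        timedelta(minutes=90),
    ),
    # Assumed example; the question and the window are placeholders.
    "site_launched": ("How did the launch go for you?", timedelta(days=1)),
}


def survey_for(event_name, event_time, now):
    """Return the question to send, or None if no rule matches or it is too late."""
    rule = RULES.get(event_name)
    if rule is None:
        return None
    question, window = rule
    if now - event_time > window:
        return None  # the moment has passed; a late ask gets polished memories
    return question


finished = datetime(2025, 1, 15, 14, 0)
print(survey_for("session_finished", finished, now=finished + timedelta(minutes=30)))
# prints: How did your skin feel right after treatment?
print(survey_for("session_finished", finished, now=finished + timedelta(hours=3)))
# prints: None
```

Keeping the windows in one place also makes it easy to experiment with them, which is essentially what both founders did by hand.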

Treat surveys like conversations – not checklists

There’s no one-size-fits-all shortcut to getting high survey response rates. If you want feedback that actually tells you something valuable, you need to understand how people think, be empathetic, and ask clear, unbiased questions.

Everything else – making sure the layout is friendly, capturing partial answers, and keeping respondents on track – can be handled by a platform like Survicate. It breaks surveys into individual screens, saves every answer (even if someone drops out!), and makes the whole experience feel effortless for the respondent.

When you combine your human-centered focus with the right tool, running surveys will stop feeling like a chore and start generating precise, honest insights you can trust.
