TL;DR

- AI works best for mechanical tasks like organizing data, creating templates, and searching through large volumes of content, but struggles with nuanced interpretation and relationship-building.

- I tested AI across my entire research workflow and found that some tools saved hours of work while others created more problems than they solved.

- The sweet spot is using AI to eliminate busywork while keeping strategic thinking, contextual interpretation, and human connection firmly in researchers' hands.

- We're in an experimental phase—test systematically in your own context rather than automating everything or dismissing AI entirely.

There's immense pressure right now to use AI and optimize everything. It's easy to get caught up in the excitement and try to automate every single step of your research process. The key, though, is choosing the right tasks to automate – cutting manual work without sacrificing the quality and nuance that make qualitative research valuable.

Over the past year, I've tested different AI solutions across my qualitative research workflow. Some saved me hours of tedious work. Others created more problems than they solved. I'll share what I learned, what's actually worth implementing, and what you should keep firmly in human hands.

What makes qualitative research feel endless?

Before diving into AI solutions, let's be honest about what makes qualitative research feel so time-consuming. There are several steps we're naturally tempted to automate with AI:

- Taking notes during user interviews

- Running the actual interview

- Analyzing transcripts and notes

- Searching and comparing notes across sessions

- Creating materials for stakeholders

By experimenting with AI at each of these stages, I wanted to find out which tasks truly benefit from automation and which should stay in researchers' hands, at least for now.

My AI experiments in the research process

I tested AI at various stages of research during real-world projects, using a range of tools along the way.

- ChatGPT – needs no introduction: a language model that handles different tasks depending on the prompt.

- Survicate – especially the Insights Hub, which helps classify incoming qualitative data and generate insights, and the Research Assistant, which lets you ask questions about your users based on the data from Survicate surveys and connected sources. It is also possible to generate surveys with AI.

- NotebookLM – Google’s tool that supports searching and exploring uploaded materials.

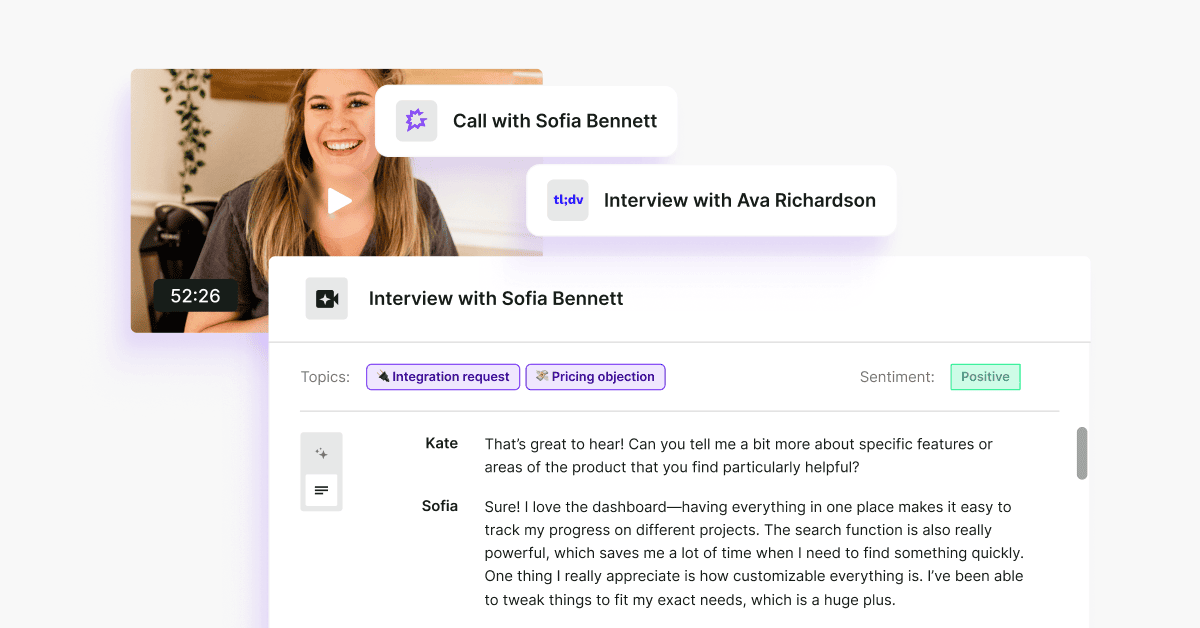

- tl;dv – a tool for creating transcripts and notes.

Creating a research script

One of the first steps in any research project is planning it and creating a script.

When testing AI for script creation, I first asked it to generate questions based on my own research goals. What I got back was more of a set of scenario questions than a finished script. Some were too direct and risked hinting at the “right” answers.

Still, the experiment gave me a starting point. I kept some questions, rephrased others, and added a few of my own. It helped me avoid the dreaded “blank page” syndrome: staring at an empty document, unsure where to begin.

Running the research

Conducting research is about more than just asking questions and collecting clean, emotion-free data. It’s about building relationships, gaining trust, and showing genuine interest. That’s why, in my view, interviews and other relationship-driven methods lose much of their value when run entirely by AI. They also remove an important part of what makes research fulfilling for the researcher.

On the other hand, some methods don’t rely on personal connection – what matters most is gathering reliable, consistent data. This is where AI support can be useful.

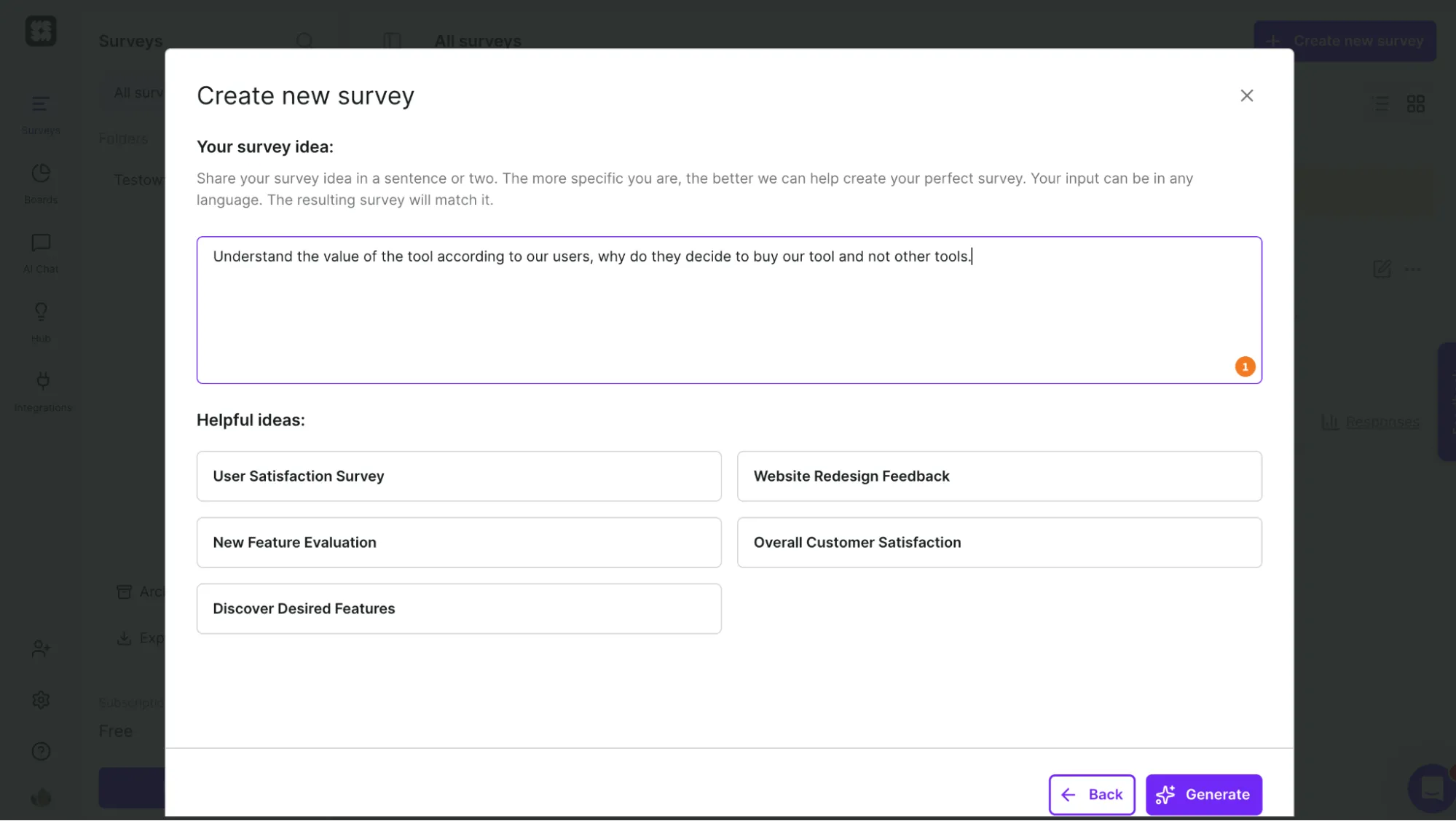

Take surveys. Designing good survey questions that don’t push participants toward a “correct” answer can be challenging. That’s where Survicate helps. Based on a prompt where you describe what you want to learn, it generates a complete survey, choosing the right question types (open-ended, closed, scale-based, etc.) for your goals.

Just like with script creation, you’re not starting from a blank page. You also save time by not building every single question and answer from scratch. Instead, you get a ready-made template tailored to your needs, which you can adjust further if necessary.

Taking notes around research questions

AI-generated notes often leave much to be desired – they capture the data but usually miss the emotional undertones, moments of surprise, or direct links back to the original research questions. That’s why I always add my own comments and observations on top of AI notes.

Another limitation is structure. AI tends to organize notes according to the flow of the interview script, simply mirroring the order in which questions were asked. But what really matters to a researcher is tying insights back to the research questions, which don’t always appear directly in the script.

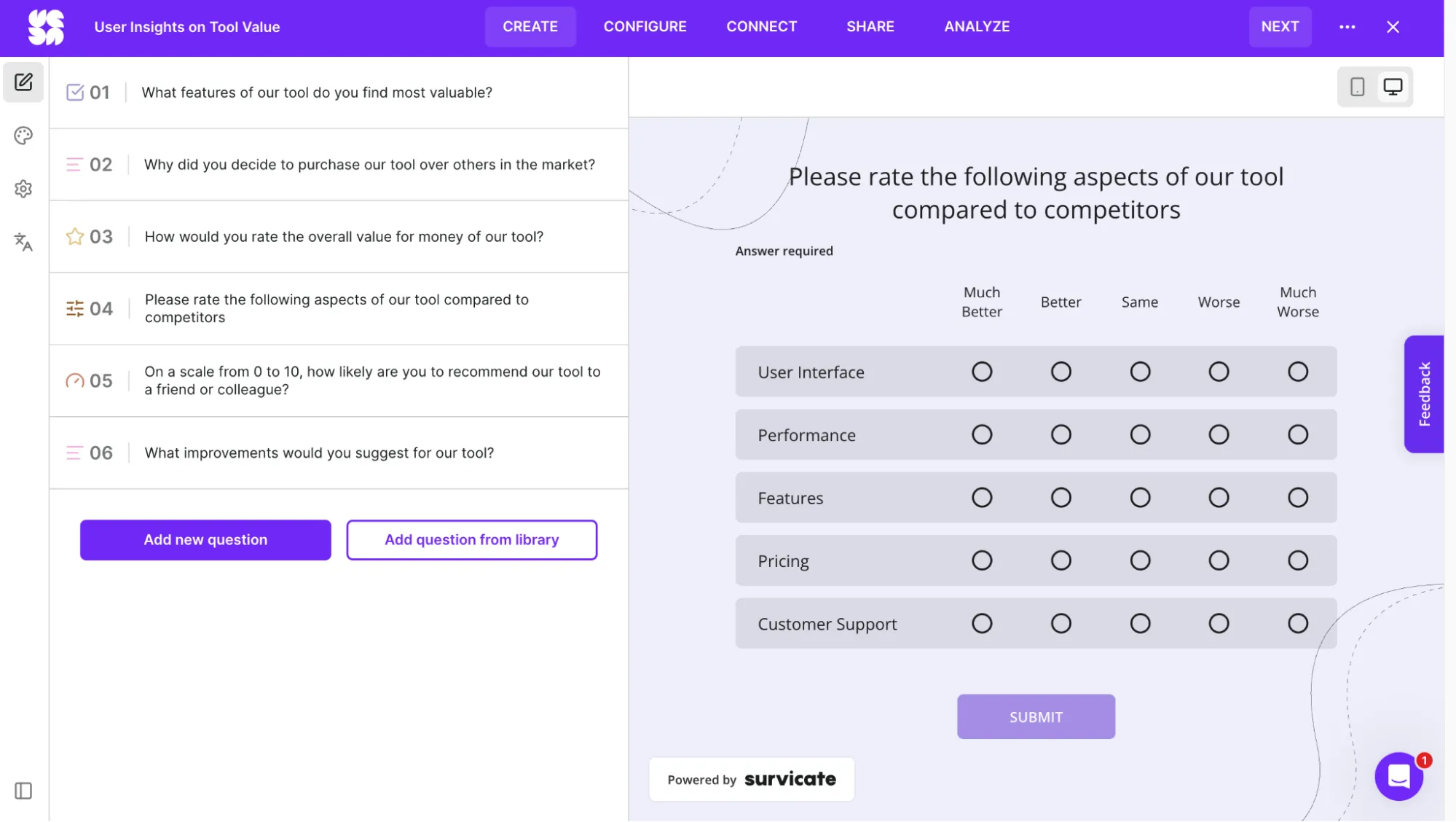

Here, though, AI proved to be a real time-saver. I asked ChatGPT to group responses from multiple interviews under categories aligned with my research questions, for example, “Perceived value of the tool” or “Sales process in the company.” Instead of manually copying, pasting, and reorganizing, I received neatly grouped notes that contained everything I needed to move forward with analysis.

The prompt I used

Role: You are a senior UX researcher with expertise in analyzing both qualitative and quantitative data. You are meticulous and thorough in your work.

Goal: To extract information from the transcript based on the categories I provide and organize it accordingly.

Context: You are conducting customer interviews to understand who they are, what their companies and sales processes look like, and what challenges they face. You need to generate answers for each category so the data can later be analyzed. Keep in mind that Livespace is a general-purpose tool, and the sales process is just one of its many features – make sure to differentiate between them in your analysis.

Output format: Bullet-point list under each category

Instructions:

1. Analyze the transcript with full attention to context for each category.

2. Identify matching statements and explain what information they reveal, including the meaning and why the user mentioned it.

3. Summarize these statements as detailed bullet points, starting with a clear observation and continuing with:

- What exactly did the user say?

- How does it impact their work?

- Why is this important to them?

- Does it highlight any change or challenge?

4. Do not stop at single-sentence answers – every point should be fully developed, containing both a direct quote and an interpretation in the context of the user’s work.

5. Add a timestamp (from the transcript) in parentheses at the end of each point.

6. Only include user responses – skip the researcher’s input unless it adds necessary context.

7. Do not invent insights – if something is not directly confirmed in the transcript, write “No direct information in transcript.”

8. Always distinguish between comments about the sales process and general functionality.

9. When analyzing problems, challenges, or solutions, focus on why they matter to the user and any previous issues they were able to solve.

10. Write each point so it’s fully understandable even without access to the original transcript – readers should grasp the user’s statement and the reasons behind it.

11. When I write “Create summary,” you will generate a full summary based on the transcript.

12. Expand on every point for all listed categories – do not skip or stop halfway.

13. Match the user’s statements to these categories: [list of categories related to].

Results

I pasted the categorized notes into Excel, organized by user, with a timestamp added to each point.

Example of AI-generated notes. User data has been modified:

Creating notes around research questions helped me organize the data. However, the whole process required expanding the prompt and pasting the information into Excel. Sometimes I had to remind ChatGPT to return to the instructions.
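If you'd rather not paste every point into Excel by hand, that last step can be scripted. Below is a minimal sketch in Python, assuming the categorized notes have already been collected into a nested structure (user → category → list of (observation, timestamp) pairs). The variable names, placeholder notes, and column layout are hypothetical illustrations, not the exact workflow described above. The script flattens the notes into one row per point and writes a CSV file that Excel can open.

```python
import csv
import io

# Hypothetical structure: user -> research-question category -> [(observation, timestamp)].
# The observations below are placeholders, not real interview data.
notes = {
    "User A": {
        "Perceived value of the tool": [
            ("Example observation about value", "00:04:12"),
        ],
        "Sales process in the company": [
            ("Example observation about the sales process", "00:11:30"),
        ],
    },
    "User B": {
        "Perceived value of the tool": [
            ("Another example observation", "00:02:45"),
        ],
    },
}


def notes_to_rows(notes):
    """Flatten the nested notes into one row per point: user, category, observation, timestamp."""
    rows = []
    for user, categories in notes.items():
        for category, points in categories.items():
            for observation, timestamp in points:
                rows.append([user, category, observation, timestamp])
    return rows


def write_csv(rows, stream):
    """Write a header row followed by the note rows to any text stream."""
    writer = csv.writer(stream)
    writer.writerow(["User", "Category", "Observation", "Timestamp"])
    writer.writerows(rows)


# Write to an in-memory buffer so the result can be inspected without creating a file.
buffer = io.StringIO()
write_csv(notes_to_rows(notes), buffer)
print(buffer.getvalue())
```

To produce an actual file, pass `open("notes.csv", "w", newline="")` to `write_csv` instead of the buffer; the `newline=""` argument is what Python's `csv` module recommends so that rows aren't separated by blank lines on Windows.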

AI’s independent analysis

First, I asked AI to draw conclusions on its own from the notes I had created. Since I had conducted the research myself and already noticed some important patterns and directions, I could see that the AI’s analysis was quite shallow. It lacked a deeper understanding of the context of statements, the metaphors used, and the tone in which some observations were expressed. Some points were valid, but the analysis wasn’t complete.

The results were even worse when AI tried to analyze and summarize usability studies on its own, using transcripts. In usability tests, what we observe above all is behavior – hesitations, mouse movements, combined with spoken thoughts and comments. But people often say one thing and do another.

Every researcher has probably seen a user scan the entire screen, click on several wrong elements, struggle to complete a task, and then sum it up by saying, “The task was very easy, I had no problem completing it.” AI won’t catch this kind of discrepancy, which is why, in my opinion, it’s of little use in analyzing behavioral research.

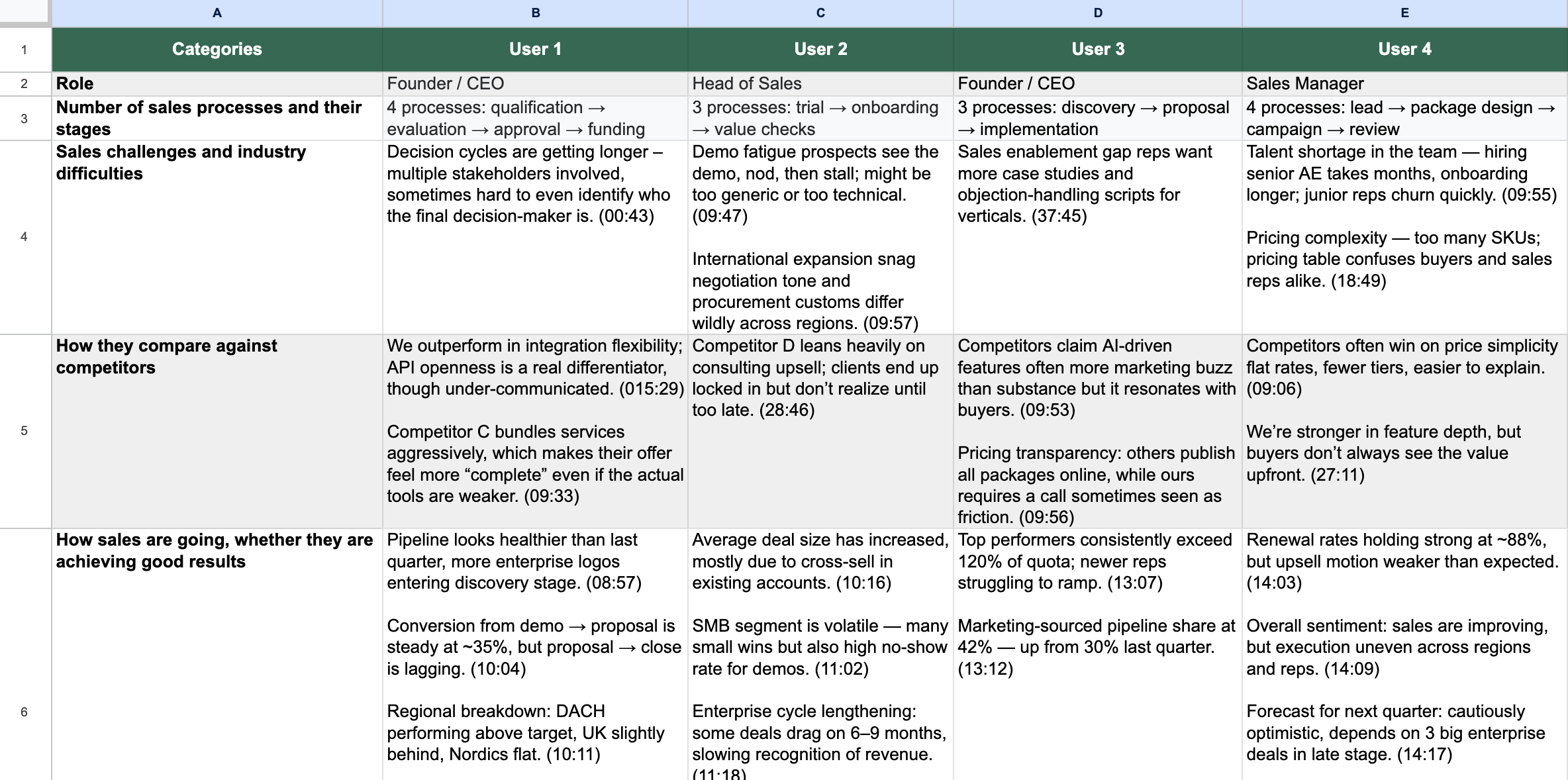

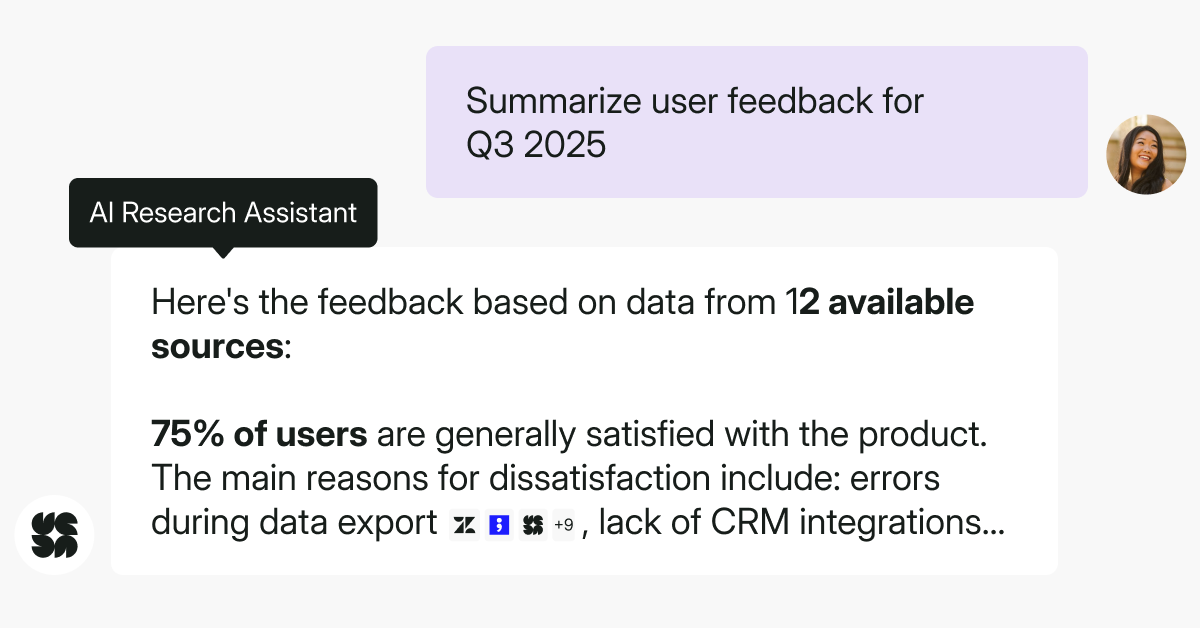

Co-analysis with Survicate

When analyzing data from a specific study, we usually focus only on that single source. In reality, though, there’s often a wealth of additional information that can broaden our perspective. Valuable insights might surface in NPS comments, recorded onboarding sessions, or churn surveys.

Ideally, we’d want to bring all of these together, following the principle of triangulation: combining different research methods and data sources to build a fuller picture.

The challenge, of course, is time and team capacity. Reviewing every possible data source that could enrich an ongoing study is rarely realistic. That’s where Survicate proved helpful.

In Insights Hub, I created themes aligned with my research questions, such as “Perceived value of the tool,” “Approach to sales,” and “Sales process challenges.” I then filtered the data to include only the last month.

This way, I was able to take into account all the relevant data for the current study and draw more accurate conclusions.

Talking with your data

If you’re the only researcher in your company, or running a study on your own, you probably miss the dynamic of cross-checking observations and connecting the dots with others. AI can help fill that gap.

I tested NotebookLM, where you can upload qualitative research data and literally have a conversation with your sources. AI can highlight overlooked fragments, suggest possible interpretations, or reveal patterns you might not have noticed.

A similar kind of dialogue is also possible in Survicate, especially in continuous research. You can bring in all the key signals from a chosen period and ask AI to surface recurring themes across the entire dataset.

Creating materials and presenting to stakeholders

When it comes to preparing summaries, AI can help enrich or streamline certain steps, but always in collaboration with us, never by doing the work entirely on its own.

Reports

AI can assist in drafting a report, but only once we’ve done the analysis ourselves. We can’t expect it to connect the dots, understand our goals, or grasp the full context.

A researcher friend recently shared how she uses AI: she feeds it her unstructured thoughts and conclusions, then asks the tool to organize them into a report.

Structuring information doesn’t require deep analysis, but it is time-consuming, so experimenting here makes sense, especially if you already have a trusted template.

Here’s an example of a simple template:

- Research goal

- Methodology

- Top 3 observations

- Full list of observations

- Recommendations and next steps

Clips

Have you ever heard a brilliant quote during an interview and then… couldn’t remember who said it or when? Manually searching recordings, or even transcripts, can be tedious. This is another area where AI helps.

With tools like tl;dv, you can ask AI to find the relevant parts of a recording. It not only summarizes the snippet but also links directly to the exact timestamp. AI doesn’t decide which quotes are important – it just makes retrieving them much faster.

Visuals

AI-generated images can add variety to a report without requiring custom design work or overused stock photos. For example, you can visualize personas that truly reflect your descriptions. I once created an image of a young data analyst – a remote worker, tech-savvy, exactly as described in the persona. The visualization captured the profile perfectly.

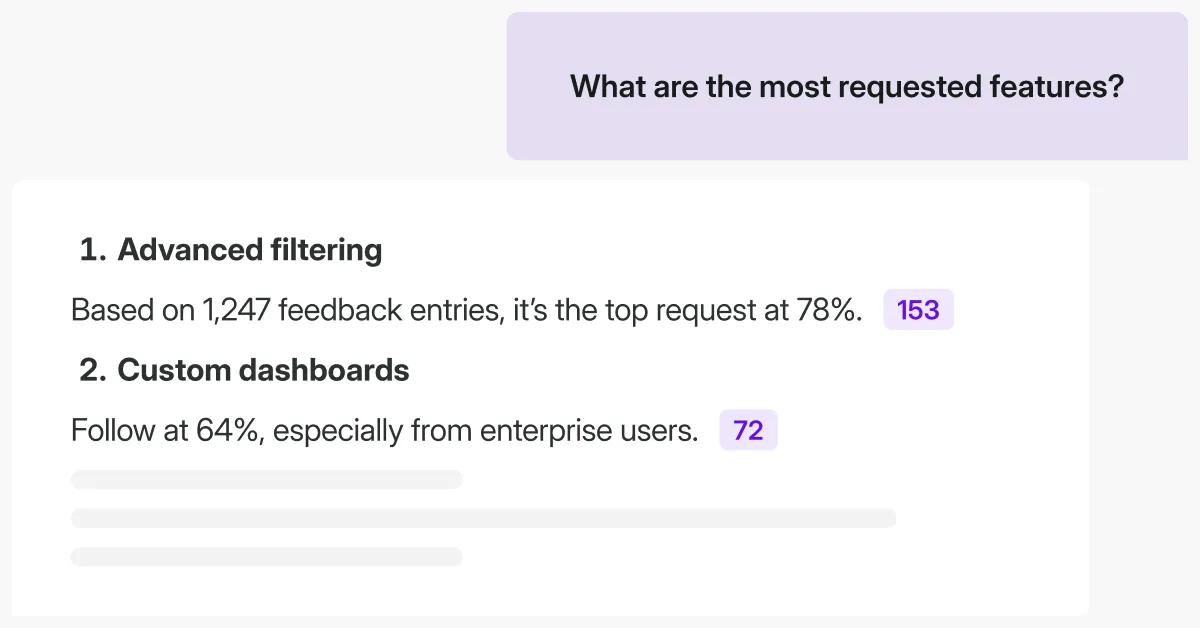

Where does AI prove most valuable?

AI has proven to be a valuable partner, though I don’t see it taking over research entirely, or drawing meaningful conclusions on its own, at least not yet.

The greatest value I’ve found is in creating research materials, such as scripts or surveys. AI solves the “blank page” syndrome by providing tailored templates that can then be refined to fit specific needs.

Another clear strength is organizing large volumes of data. AI can categorize notes according to research questions and group similar quotes by theme. This is a huge help for researchers, especially when the AI includes exact quotes and references, making the analysis more transparent and efficient.

AI can also enrich studies with additional data by pulling insights from multiple sources into a cohesive view.

Finally, when it comes to presenting findings, AI speeds things up by helping locate the right fragments in recordings, creating clips, and even generating visuals that align with your idea, without relying on custom illustrations or generic stock images.

Still, it’s essential to remember what these tools really are: systems built on language models. They can hold conversations and analyze transcripts, but they can’t build human relationships during interviews or detect the subtle gap between what people say and what they actually do.

Summary

We're in an experimental phase with AI in qualitative research, and that's exactly where we should be. Rather than rushing to automate everything or dismissing AI entirely, this is the time to test systematically and learn what actually works.

My experiments showed that certain stages of the research process benefit significantly from AI assistance – organizing data, creating initial templates, searching through large volumes of content, and handling mechanical tasks that traditionally eat up hours of our time. These are areas where AI genuinely enhances our capabilities without compromising the quality of insights.

But there are other stages that are better left to our human minds – building rapport with participants, interpreting the gap between what people say and do, understanding context and subtext, and making strategic connections between findings and business implications. These require the kind of nuanced judgment that remains distinctly human.

The key is being intentional about where we apply AI. Test different approaches in your own research context. Some tools that work brilliantly for one researcher might create more friction for another. Some tasks that seem perfect for automation might actually lose important nuance when handed over to AI.

We're still learning the boundaries of what AI can and can't do well in research. That's not a problem; it's an opportunity to thoughtfully integrate these tools in ways that make us more effective researchers, not just more efficient ones.
